The buzz around AI is intense. AI assistants can now produce reports, executive summaries, proposals, and even business strategies. The rise of generative artificial intelligence is transforming how organizations create and use information. But this technological revolution also introduces new layers of risk. For cybersecurity experts and risk managers, the challenges have just become considerably more complex.

Explosion of Unstructured Data

Generative AI tools such as Microsoft Copilot, deployed in collaborative environments, rapidly increase the volume of unstructured data. An estimated 90% of the data generated today is unstructured (text, video, images), and AI-driven content creation keeps pushing that share higher. The continuous generation of reports, documents, and real-time conversations contributes heavily to this surge.

Experts estimate that the volume of unstructured data could grow by 28% annually, doubling every two to three years in certain industries. This presents major challenges in data management, including storage, security, and effective use in analytics systems.
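The 28% figure and the doubling claim are consistent with each other: at a steady 28% annual growth rate, volume doubles in about 2.8 years. The short Python calculation below checks this. It assumes constant compound growth (real growth is rarely that regular), and the 100 TB starting volume is a hypothetical example, not a figure from this article.

```python
import math


def doubling_time(annual_growth_rate: float) -> float:
    """Years needed for a quantity growing at a fixed annual rate to double."""
    # Solve (1 + r) ** t = 2 for t.
    return math.log(2) / math.log(1 + annual_growth_rate)


def projected_volume(current_volume: float, annual_growth_rate: float, years: int) -> float:
    """Volume after compounding the growth rate for a number of years."""
    return current_volume * (1 + annual_growth_rate) ** years


print(f"Doubling time at 28%/year: {doubling_time(0.28):.1f} years")  # → 2.8 years
# Hypothetical starting point: 100 TB of unstructured data today.
print(f"100 TB after 5 years: {projected_volume(100, 0.28, 5):.0f} TB")  # → 344 TB
```

In other words, an organization holding 100 TB of unstructured data today would hold more than three times as much within five years if that rate held.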

Organizations need robust strategies to handle this growing volume of potentially sensitive data. Cybersecurity solutions tailored to this reality must be implemented quickly, so that the data can be put to good use without exposing the organization to costly breaches and privacy incidents.

Key Concepts

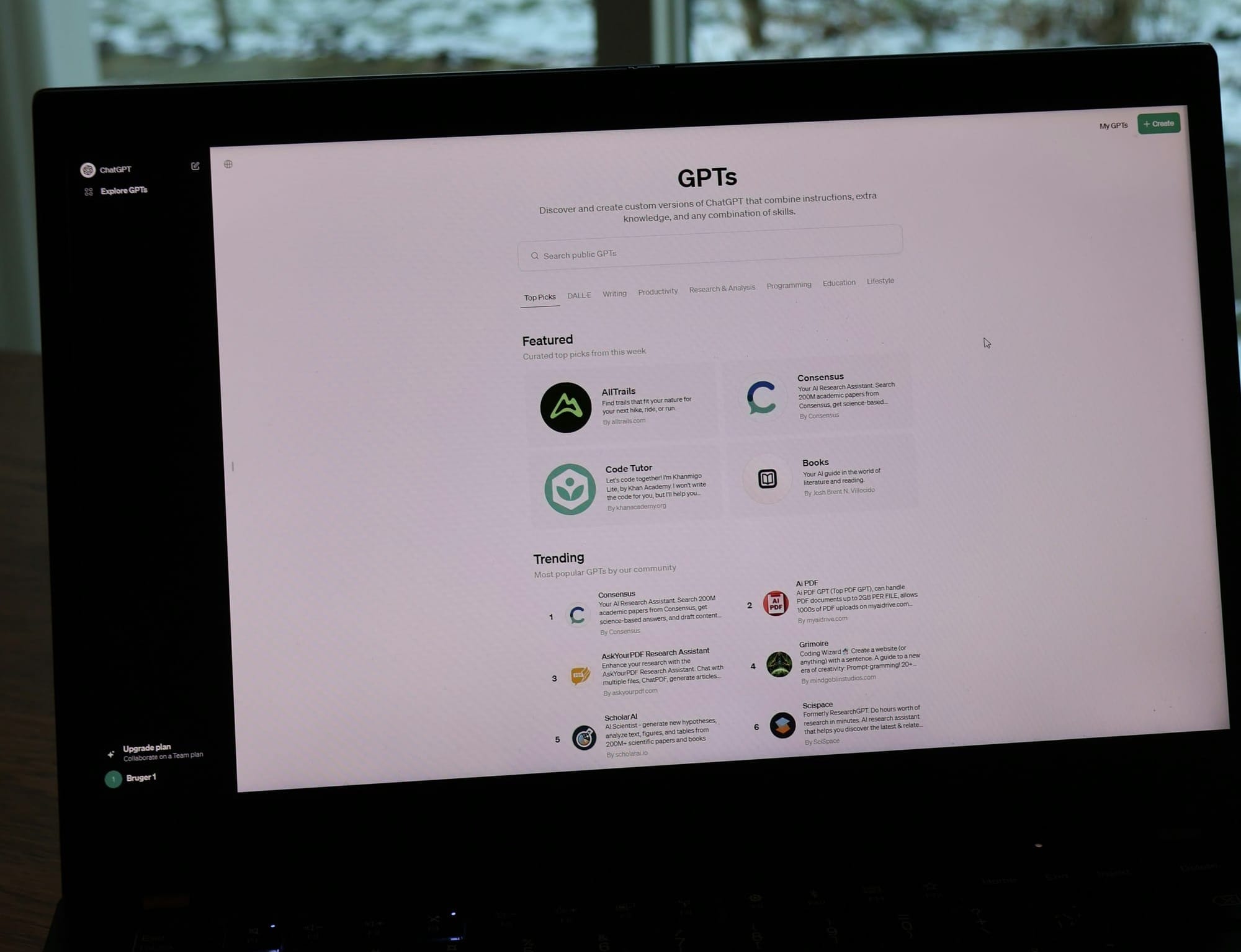

Before diving deeper into the risks related to implementing tools like Microsoft Copilot or Google Gemini, let’s review a few important AI concepts.

An AI model is a mathematical structure used to make predictions or generate content from input data. In the case of large language models (LLMs), such as the GPT models behind ChatGPT, the model is a neural network trained to understand and produce coherent text.

Parameters are internal variables in the model that get adjusted during training to improve predictions. LLMs have billions of parameters that determine how input text is interpreted and transformed into output.

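Training is the process of adjusting these parameters using training data. An LLM is trained on very large volumes of text: it repeatedly predicts the next word (more precisely, the next token), measures its prediction error, and adjusts its parameters to reduce that error, typically with a variant of gradient descent running on powerful computing infrastructure.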
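To make "adjusting parameters to reduce prediction error" concrete, here is a deliberately tiny gradient descent example in Python. It is a toy sketch, not how an LLM is trained: the model has a single parameter `w` instead of billions, it predicts `y = w * x` instead of the next token, and it uses plain gradient descent on a squared error, whereas real LLM training uses cross-entropy loss and optimizers such as Adam. The data and the learning rate are made up for illustration.

```python
# Toy training data generated from the rule y = 2x, which the model must discover.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]


def loss(w: float) -> float:
    """Mean squared prediction error of the model y = w * x over the examples."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient(w: float) -> float:
    """Derivative of the loss with respect to the single parameter w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def train(w: float = 0.0, learning_rate: float = 0.01, steps: int = 200) -> float:
    """Start from an untrained parameter and repeatedly step against the gradient."""
    for _ in range(steps):
        # Each step nudges w in the direction that reduces the prediction error.
        w -= learning_rate * gradient(w)
    return w


w = train()
print(f"learned w = {w:.3f}, loss = {loss(w):.6f}")
```

Starting from `w = 0`, the parameter converges to 2, the value that makes every prediction correct. An LLM does the same thing across billions of parameters at once, which is why training requires so much computing power.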

A Real-World Example

Imagine a merger or acquisition project that requires a report containing intellectual property (IP), sensitive personal information, and critical financial data.

With AI, much of this process can be automated. For example, Copilot can transcribe meetings, summarize key points, and insert them into a report. It can also analyze complex datasets, suggest relevant tables, and generate summaries and recommendations based on strategic objectives.

Before responding to a query, tools like Copilot enrich the prompt with user-specific context, a process called grounding. The tool searches the documents, emails, and other resources the user can access and adds the most relevant excerpts to the prompt, so that the answer fits the user’s context. Because this enterprise data is injected into the prompt invisibly, any of it may surface in the output.
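The Python sketch below illustrates the general idea of permission-trimmed grounding. It is not Copilot's actual implementation: the `Document` class and the `search` and `ground_prompt` functions are hypothetical, and a naive keyword overlap stands in for the semantic search a real product would use. The key point is that the only filter applied is "can this user read the document?", so whatever the user can read is eligible to end up in the prompt.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    title: str
    text: str
    allowed_users: set[str] = field(default_factory=set)  # who may read this document


def search(user: str, query: str, corpus: list[Document], top_k: int = 2) -> list[Document]:
    """Rank the documents this user may read by naive keyword overlap with the query."""
    terms = set(query.lower().split())
    readable = [d for d in corpus if user in d.allowed_users]  # permission trimming
    scored = [(len(terms & set(d.text.lower().split())), d) for d in readable]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in relevant[:top_k]]


def ground_prompt(user: str, query: str, corpus: list[Document]) -> str:
    """Build the prompt sent to the model: retrieved excerpts followed by the user's question."""
    context = "\n".join(f"[{d.title}] {d.text}" for d in search(user, query, corpus))
    return f"Context:\n{context}\n\nQuestion: {query}"


corpus = [
    Document("Deal memo", "acquisition price and target revenue", {"alice", "bob"}),
    Document("HR file", "salary and bonus details for staff", {"carol"}),
]

print(ground_prompt("alice", "salary of acquisition staff", corpus))  # HR file is excluded

# One careless share is enough: once alice can read the HR file, it reaches her prompts too.
corpus[1].allowed_users.add("alice")
print(ground_prompt("alice", "salary of acquisition staff", corpus))
```

The second call shows the risk discussed later in this article: nothing in the grounding step distinguishes an intentional share from an accidental one.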

After the AI generates a draft, a post-processing phase refines it: it filters sensitive information (if configured correctly) and adapts the tone and formatting to professional standards.
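In practice, this filtering is handled by the platform's data loss prevention and labelling features, which are far more sophisticated than pattern matching. The Python sketch below only illustrates the basic idea of redacting a draft before it reaches the user. The two patterns (email addresses and numbers in the format of a Canadian Social Insurance Number) are assumptions chosen for the example, and a filter like this catches nothing it was not explicitly configured for.

```python
import re

# Hypothetical detectors for illustration; real DLP policies use far richer rules.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SIN": re.compile(r"\b\d{3}[- ]?\d{3}[- ]?\d{3}\b"),  # Canadian SIN format, e.g. 123-456-789
}


def redact(draft: str) -> str:
    """Replace every match of a sensitive pattern with a labelled placeholder."""
    for label, pattern in PATTERNS.items():
        draft = pattern.sub(f"[{label} REDACTED]", draft)
    return draft


print(redact("Contact jane.doe@example.com, SIN 123-456-789."))
# → Contact [EMAIL REDACTED], SIN [SIN REDACTED].
```

The "if configured correctly" caveat matters: a piece of sensitive information for which no rule exists passes through untouched.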

Risks to Manage

Security teams already struggle with discretionary access, where users share documents or grant access in Teams to colleagues or partners. AI exacerbates this challenge by making information easier to create, share, and retrieve—often beyond traditional access control capabilities.

An AI assistant can access any document its user can, and it is far better than the user at finding the relevant ones. Poorly managed access therefore increases the risk of sensitive data exfiltration, and malicious actors will find it easier to exploit these weaknesses.

Legal and Regulatory Impact

Quebec’s Law 25 imposes strict data protection standards, and Canada’s federal Bills C-26 and C-27 propose further cybersecurity and privacy obligations. Under such rules, properly managing unstructured information is mandatory, not optional.

The ability of LLMs to integrate accessible data into contextual responses raises the risk of unintentional disclosure. Regulatory compliance is no longer a best practice—it’s a legal obligation for business leaders.

The Urgency of Action

Discretionary access must no longer be handled casually. AI tools like Copilot can rapidly identify and retrieve sensitive documents, increasing the risk of leakage.

Access management must be systematic, automated, and embedded in cybersecurity strategy.
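As an illustration of what "automated" can mean, the Python sketch below flags confidential items that are shared with broad groups or with users outside the organization's domain. Everything in it is hypothetical: the `SharedItem` data model, the sensitivity labels, the group names, and the domains. In a real environment, the sharing data would come from the collaboration platform's own reports or administration APIs, and the rules would reflect the organization's classification policy.

```python
from dataclasses import dataclass


@dataclass
class SharedItem:
    path: str
    sensitivity: str        # hypothetical labels: "public", "internal", "confidential"
    shared_with: list[str]  # users or groups that have access


BROAD_GROUPS = {"Everyone", "All Employees"}  # hypothetical broad-access groups


def flag_overshared(items: list[SharedItem], org_domain: str) -> list[tuple[str, str]]:
    """Return (path, reason) pairs for confidential items exposed too widely."""
    findings = []
    for item in items:
        if item.sensitivity != "confidential":
            continue
        for principal in item.shared_with:
            if principal in BROAD_GROUPS:
                findings.append((item.path, f"shared with broad group '{principal}'"))
            elif "@" in principal and not principal.endswith("@" + org_domain):
                findings.append((item.path, f"shared with external user {principal}"))
    return findings


items = [
    SharedItem("/deals/merger-report.docx", "confidential", ["alice@corp.example", "Everyone"]),
    SharedItem("/deals/term-sheet.xlsx", "confidential", ["partner@advisor.example"]),
    SharedItem("/marketing/brochure.pdf", "public", ["Everyone"]),
]

findings = flag_overshared(items, "corp.example")
for path, reason in findings:
    print(path, "->", reason)
```

Run on a schedule, a check like this turns access review from an occasional manual audit into a continuous control, which is exactly what AI-assisted retrieval makes necessary.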

Costly Mistakes

Business leaders must understand the dual nature of AI: it’s both a powerful accelerator and a source of new vulnerabilities.

On one hand, generative AI enhances competitiveness by automating tasks and improving collaboration. On the other, it expands the attack surface—especially for unstructured data. Neglecting this risk can lead to costly financial and legal consequences.

A proactive, rigorous approach is essential: secure sensitive data, enforce structured access controls, and fully leverage AI’s potential. The key to long-term resilience is striking the right balance between innovation and protection.